Voice command to activate Loona’s vision recognition

Activating Loona’s Visual Assistive Conversation feature (also known as Multimodal Vision Recognition)

With the recent multimodal vision recognition update, Loona can describe her surroundings or any object you show her.

Loona can also tell you the names of objects in other languages.

Although Keyi describes this feature as a “Visual Assistive Conversation”, I find that it doesn’t work when Loona is in companion mode or chat mode.

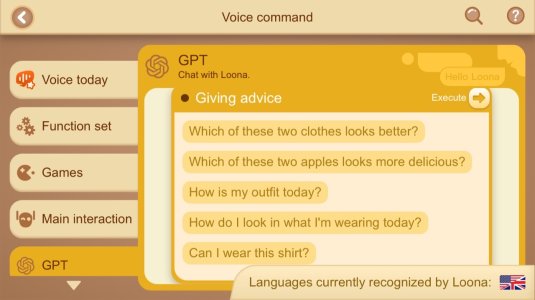

Some examples of commands you can use to initiate this feature:

“Hello Loona, what am I holding?”

“Hello Loona, what do you see?”

“Hello Loona, what is this object in (insert language here)?”

“Hello Loona, how is my outfit today?”

More example commands can be found in the GPT and Language Learning sections of the voice command list in the Hello Loona app.