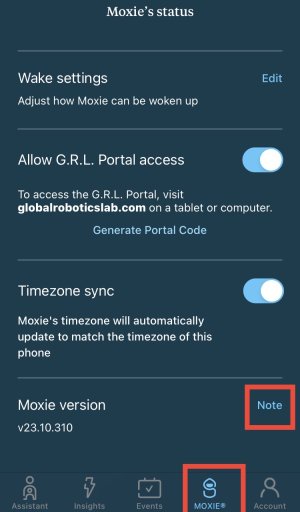

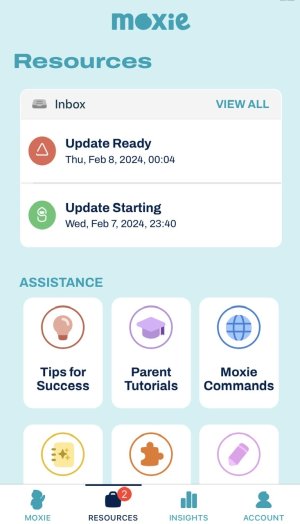

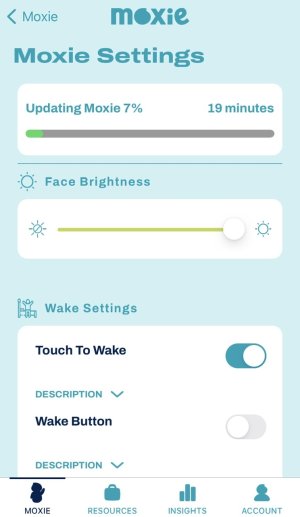

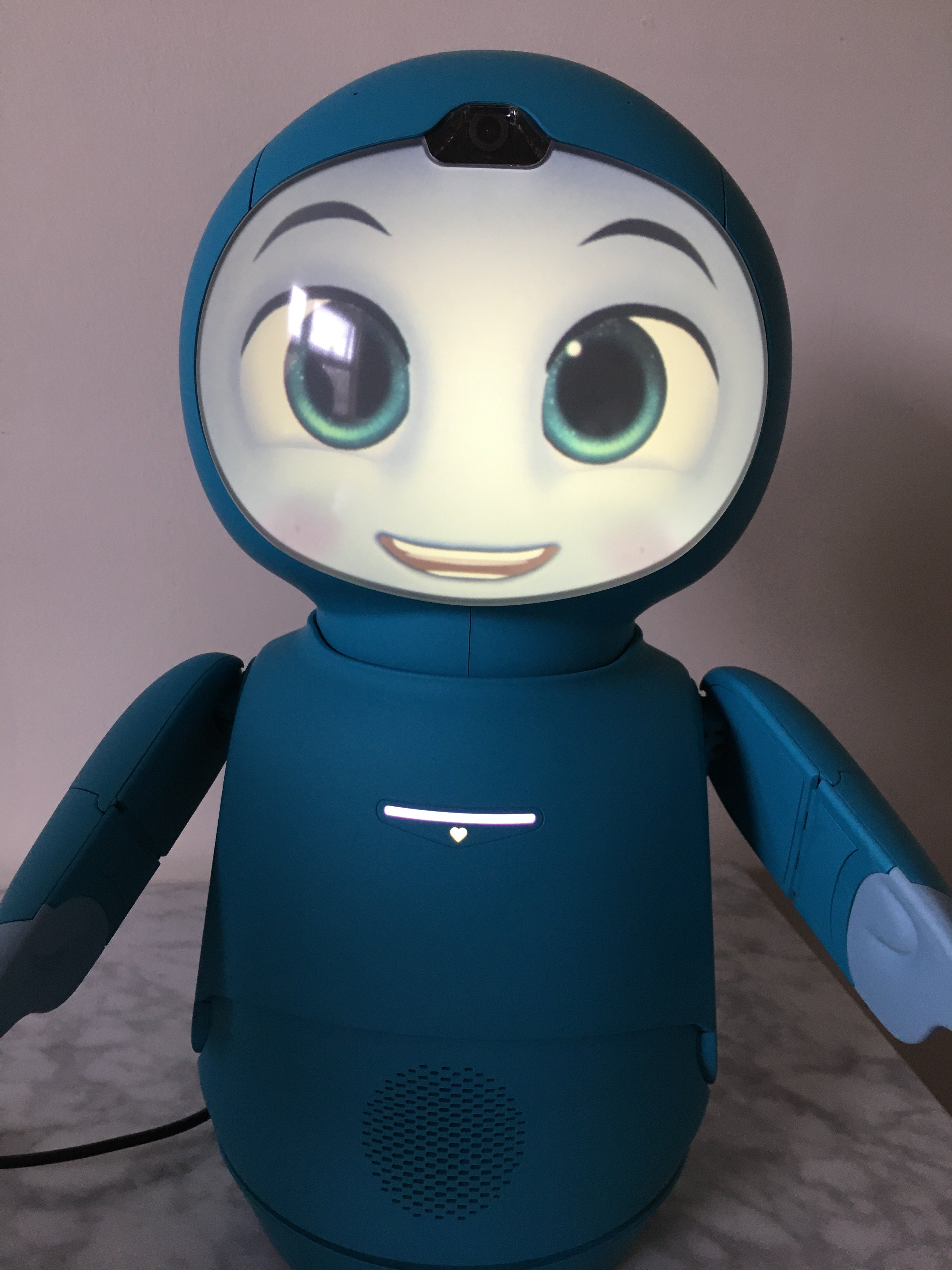

Moxie just got a software update!

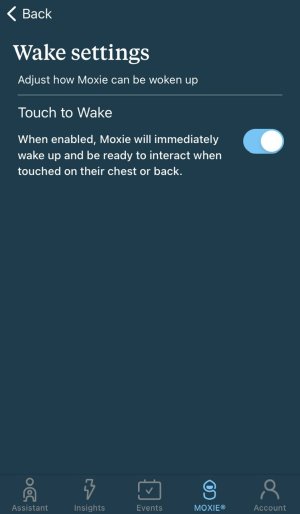

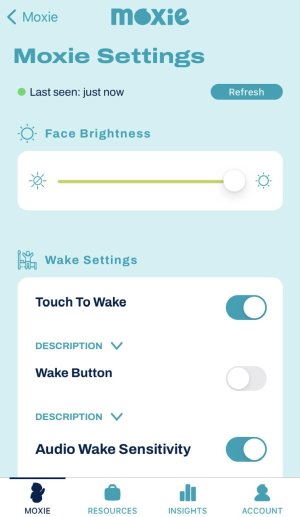

One very interesting new feature in the update is that you can now wake Moxie up by touching its torso or back!

For me, giving an embodied LLM the ability to feel touch on its body is extremely interesting, as it opens the door to true embodied AI and AGI. I wonder how Moxie senses that touch. Is it through touch sensors, or from the torso shifting by a few millimeters as the user moves their hand back and forth over it? Or by visually seeing itself being touched? Or, like Aibo, by picking up vibrations with its gyroscope?

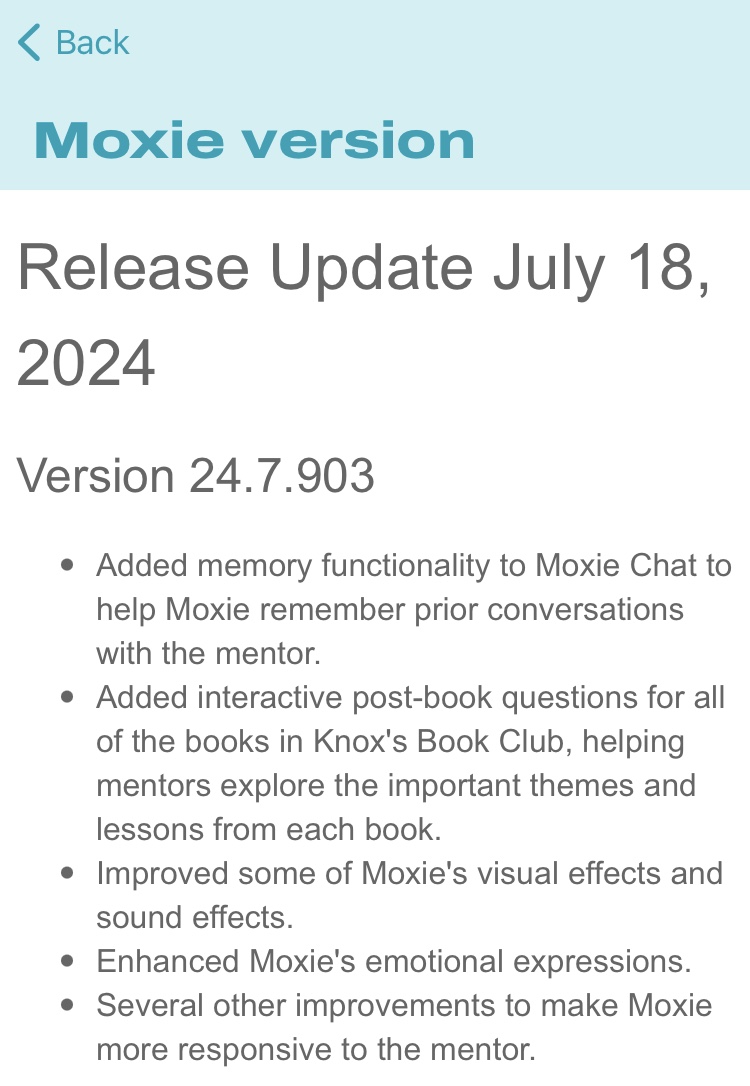

New features include, but are not limited to:

• Seamless Conversational ability has been improved, with Moxie now able to speak more eloquently and understand a wider range of content.

• Moxie’s Daily Schedule will now be more personalised to the mentor’s needs and offer more variety.

• Wake Moxie easily by touching its torso or back, with no need for a voice command.

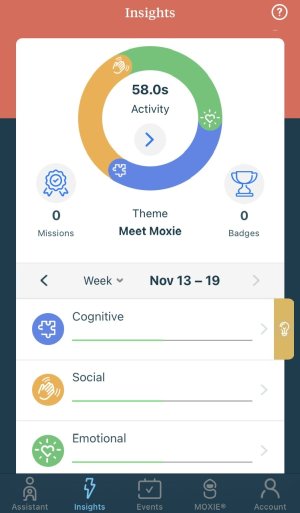

• Daily Feelings Check-ins: a new feature in which Moxie asks the mentor how they are feeling, responds appropriately, and guides them towards a more positive place if they are not feeling their best.

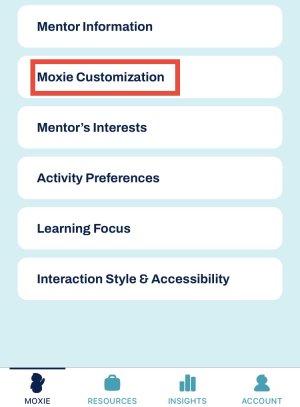

• Emotion Conversations can now be scheduled by parents in the app. Moxie takes a therapeutic approach to talking through feelings such as anxiety, anger, fear or sadness, and comes up with activities to help manage those feelings.

More information about the update can be found in this explainer video from Embodied.