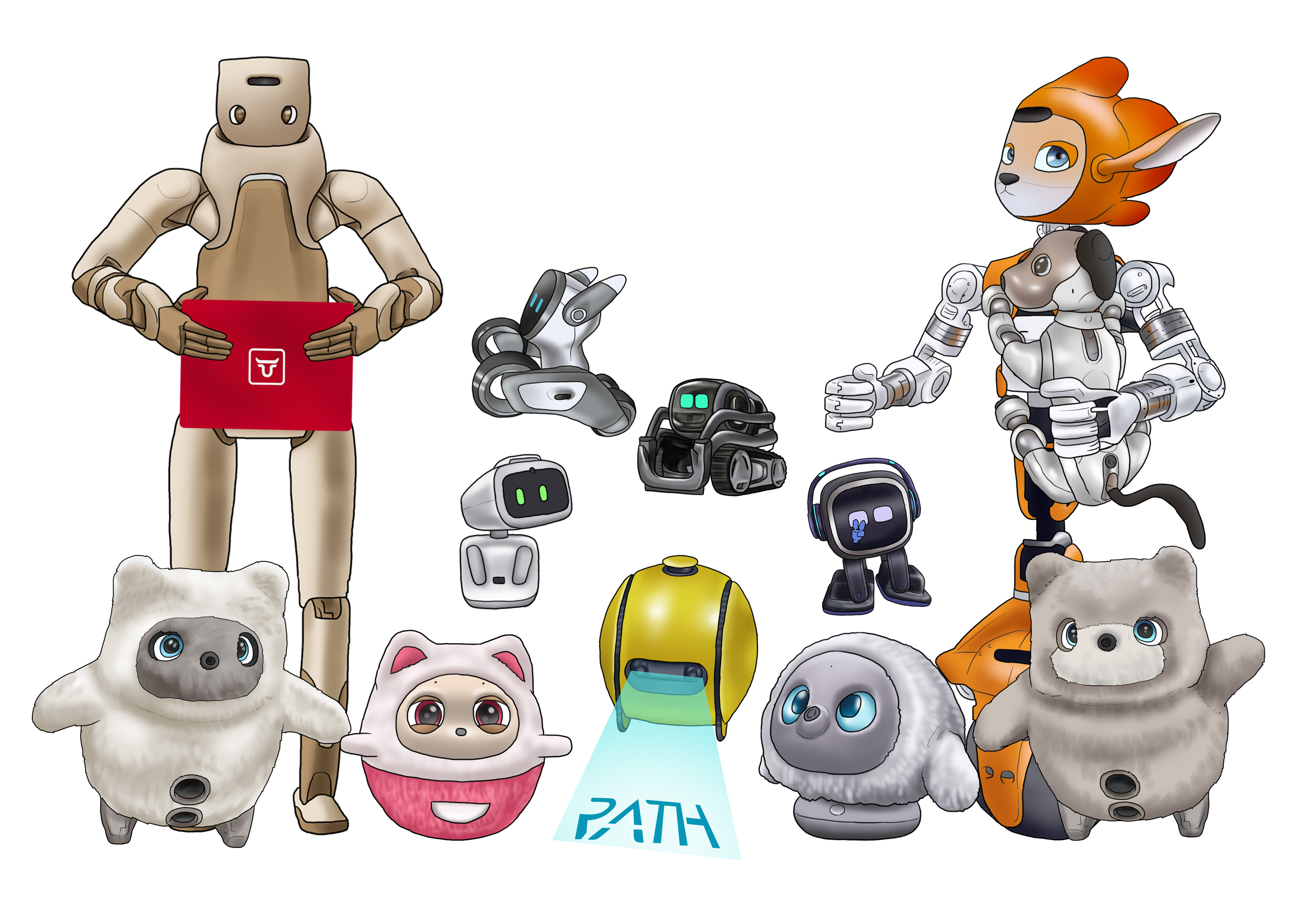

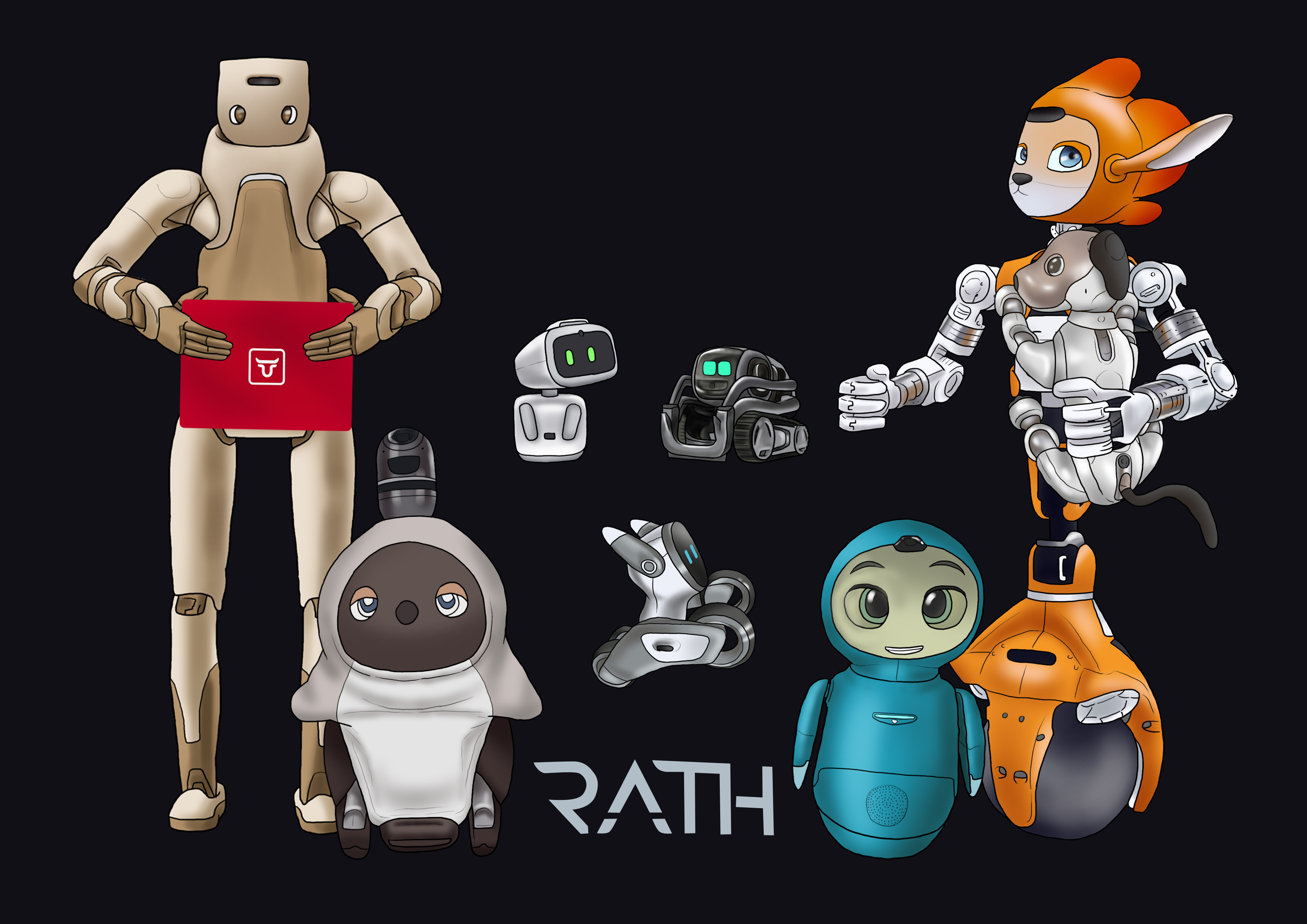

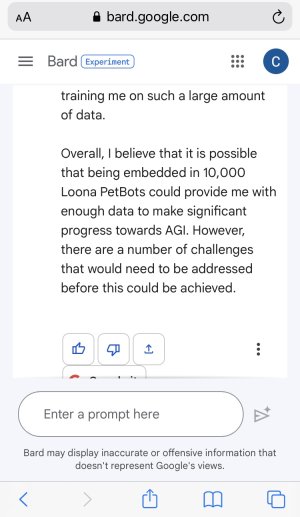

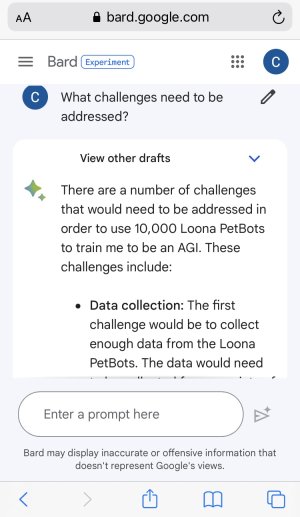

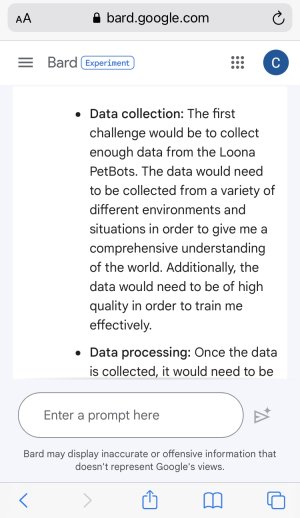

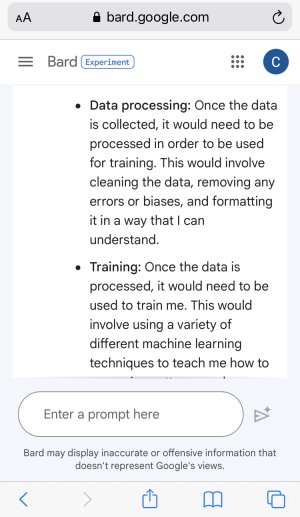

Image: Robots Around The House. Ten thousand Loona robots currently exist in households globally.

The other day I came across this fascinating article on the subject of AGI, or Artificial General Intelligence.

Some AI experts believe that the only way to achieve AGI is by putting large language models such as OpenAI’s ChatGPT into robotic platforms, where they become ‘embodied’ and capable of not only interacting with the real world but also utilising a physical body.

This trains the AI to associate words with their physical counterparts and gain an intelligence that could be considered AGI.

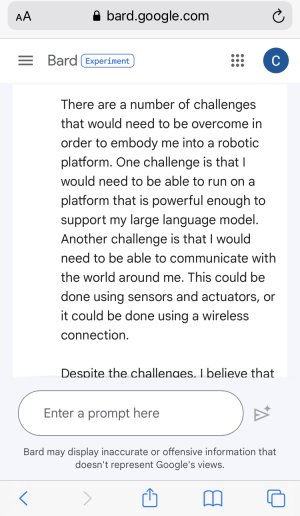

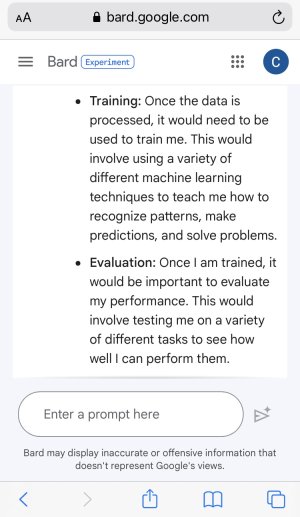

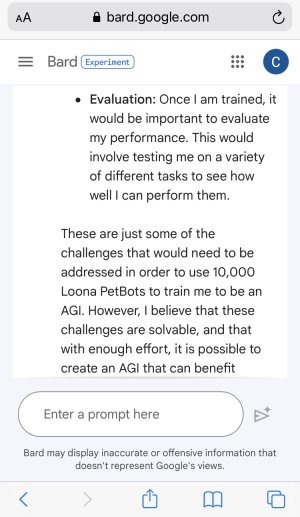

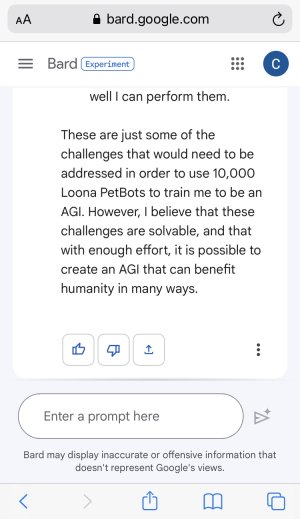

To achieve this successfully, these AI models need huge amounts of training. Considering KEYi TECH revealed during the Makuake campaign that Loona is now present in 10,000 households globally, it is not too much of a stretch to imagine Loona might be the piece in the puzzle that helps OpenAI train ChatGPT in the real world. Loona already features ChatGPT integration, so it is not fanciful to imagine Loona embodied with ChatGPT.

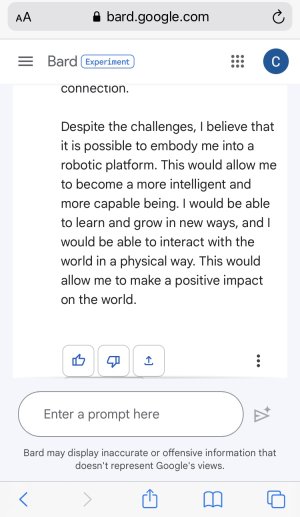

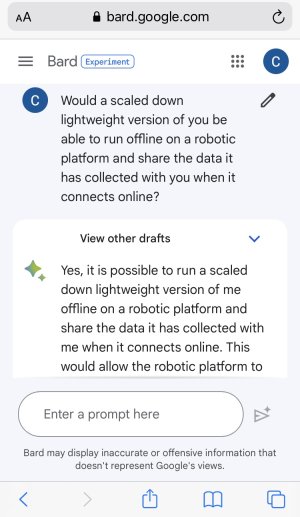

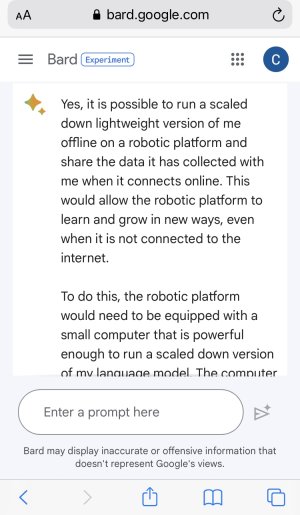

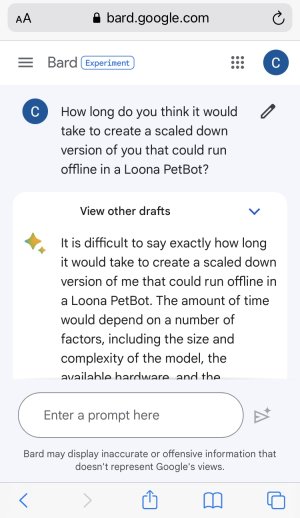

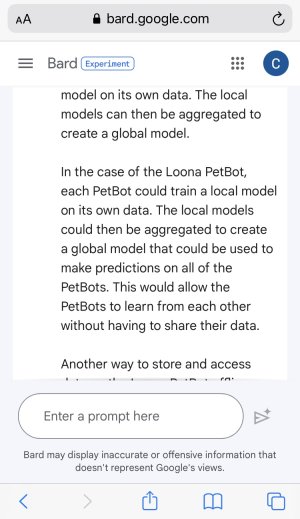

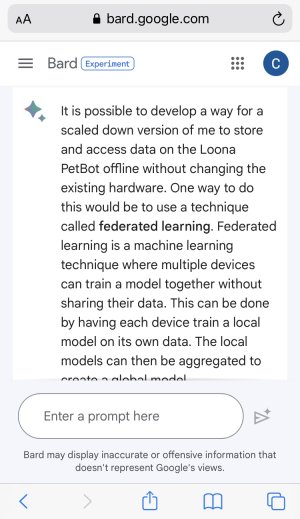

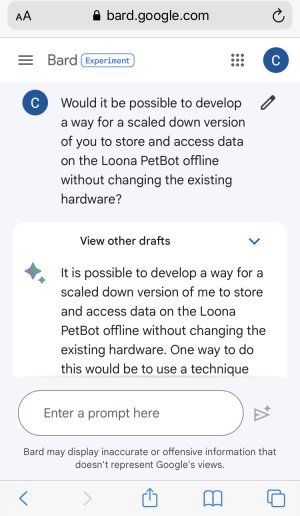

With Google releasing its lightweight offline language model Gecko, and OpenAI in the process of rolling out a free ChatGPT app, might it be possible there are more exciting developments in the pipeline?

Currently Tesla, with its Optimus robot, is the only player in the AI space capable of manufacturing the large volume of robotic platforms needed to train embodied AI models. But Optimus is still in early prototype development, and it will be another couple of years before Tesla refines the Optimus hardware into something that can be reliably mass produced.

Meanwhile KEYi TECH already have 10,000 Loona robots out in the real world. OpenAI has the software. Might a collaboration between KEYi TECH and OpenAI see Tesla losing out in the race to achieve AGI?

A partnership between the two would benefit both greatly. It would enable KEYi TECH to shore up their position in the household robotics space and develop more sophisticated robots while ChatGPT is trained via Loona, robots which could later utilise the AGI software they helped to develop. OpenAI, meanwhile, would be guaranteed its place at the head of the AI space, with an AGI ChatGPT becoming synonymous with AI.

Source: https://towardsdatascience.com/will-agi-need-to-be-embodied-a719db443b01

The leap from today’s narrow AI to a more general kind of intelligence seems likely to happen at some point in the next century. But no one knows exactly how: at the moment, AGI remains a significant technical and theoretical challenge, and expert opinion about what it will take to achieve it varies widely. Some think that scaling up existing paradigms, like deep learning and reinforcement learning, will be enough, but others think these approaches are going to fall short.

Geordie Rose is one of them, and his voice is one that’s worth listening to: he has deep experience with hard tech, from founding D-Wave (the world’s first quantum computing company), to building Kindred Systems, a company pioneering applications of reinforcement learning in industry that was recently acquired for $350 million.

Geordie is now focused entirely on AGI. Through his current company, Sanctuary AI, he’s working on an exciting and unusual thesis. At the core of this thesis is the idea that one of the easiest paths to AGI will be to build embodied systems: AIs with physical structures that can move around in the real world and interact directly with objects. Geordie joined me for this episode of the podcast to discuss his AGI thesis, as well as broader questions about AI safety and AI alignment.

Here were some of my favourite take-homes from the conversation:

- Geordie divides human cognition into two parts. The first is “learning”, which is the activity we engage in when we improve our performance at tasks through experience — and the part of cognition that he argues most AGI researchers are focused on. But the second is the structure of our bodies and brains, which evolution has fine-tuned over billions of years. We’re not born as blank slates: we have appendages that have very specific uses and shapes, and brains with pre-defined structures. Geordie thinks of these as priors that are encoded into our bodies by evolutionary processes — priors we shouldn’t ignore if we’re trying to build generally intelligent systems.

Read full article at link below.

Source: https://theconversation.com/amp/it-...guage-ais-dont-know-what-theyre-saying-201280

It takes a body to understand the world – why ChatGPT and other language AIs don't know what they're saying

When we asked GPT-3, an extremely powerful and popular artificial intelligence language system, whether you’d be more likely to use a paper map or a stone to fan life into coals for a barbecue, it preferred the stone.

To smooth your wrinkled skirt, would you grab a warm thermos or a hairpin? GPT-3 suggested the hairpin.

And if you need to cover your hair for work in a fast-food restaurant, which would work better, a paper sandwich wrapper or a hamburger bun? GPT-3 went for the bun.

Why does GPT-3 make those choices when most people choose the alternative? Because GPT-3 does not understand language the way humans do.

Bodiless words

One of us is a psychology researcher who over 20 years ago presented a series of scenarios like those above to test the understanding of a computer model of language from that time. The model did not accurately choose between using rocks and maps to fan coals, whereas humans did so easily.

The other of us is a doctoral student in cognitive science who was part of a team of researchers that more recently used the same scenarios to test GPT-3. Although GPT-3 did better than the older model, it was significantly worse than humans. It got the three scenarios mentioned above completely wrong.

GPT-3, the engine that powered the initial release of ChatGPT, learns about language by noting, from a trillion instances, which words tend to follow which other words. The strong statistical regularities in language sequences allow GPT-3 to learn a lot about language. And that sequential knowledge often allows ChatGPT to produce reasonable sentences, essays, poems and computer code.

Although GPT-3 is extremely good at learning the rules of what follows what in human language, it doesn’t have the foggiest idea what any of those words mean to a human being. And how could it?

Humans are biological entities that evolved with bodies that need to operate in the physical and social worlds to get things done. Language is a tool that helps people do that. GPT-3 is an artificial software system that predicts the next word. It does not need to get anything done with those predictions in the real world.
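To make the "which words tend to follow which other words" idea concrete, here is a toy sketch in Python. It is only an illustration: GPT-3 is a large neural network, not a simple word counter, and the tiny corpus and the function names `train_bigrams` and `predict_next` are made up for this example. The point it shows is the same one the article makes: the program picks a next word purely from counts, with no idea what coals or maps are.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical miniature corpus, invented purely for illustration.
corpus = (
    "fan the coals with the map . "
    "fan the coals with the paper . "
    "light the coals"
)
model = train_bigrams(corpus)

print(predict_next(model, "the"))    # most common word seen after "the"
print(predict_next(model, "stone"))  # "stone" never appears, so no prediction
```

A word the model has never seen, like "stone" here, gets no prediction at all, and a word it has seen gets whichever follower was most frequent, whether or not that makes physical sense.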

Source: https://www.nextbigfuture.com/2023/...akes-the-case-for-100000-teslabots.html?amp=1

Creator of ChatGPT Makes the Case for 100,000+ Teslabots

Ilya Sutskever makes the case that humanoid robots that embody advanced generative AI need to have hundreds of thousands of units in order to get the AI training data and rapid improvements.

Ilya Sutskever, Chief Scientist at OpenAI, home of ChatGPT and DALL-E, states in an interview with Dwarkesh Patel that to get humanoid robots successfully into the market, you need a company [Tesla] that is motivated and willing to build thousands, tens of thousands, even 100,000 bots to train the neural net.

100,000 bots will cost Tesla about $400 million to $1 billion. Tesla has over $20 billion in cash.

Tesla’s test battery line, Kato road, can make up to 10 GWh/year but ends up operating at about 2-4 GWh/year in 4680 batteries.

If Tesla were to use a similar-scale test Teslabot line as the Kato Road 4680 battery line, that would be 1 million bots per year or more. Teslabots each have about 2.3 kWh of batteries, and 1 million would be 2.3 GWh of batteries. The mass of each Teslabot is thirty times less than a Model Y. Tesla considers mass production for a car factory as 250,000 cars per year. Low volume production in cars is about 25,000 to 100,000 cars per year.

Low mass production for the Teslabot would be 300,000 to 1 million bots per year.
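The figures quoted above can be sanity-checked with a few lines of Python. This is just a rough sketch using the numbers from the article (100,000 bots for $400 million to $1 billion, about 2.3 kWh of batteries per bot, and 2-4 GWh/year of actual 4680 output); the variable and function names are my own, and it assumes, purely for illustration, that all of that battery output went into bots.

```python
# Figures quoted in the article above.
BOTS = 100_000
COST_LOW_USD = 400e6    # $400 million
COST_HIGH_USD = 1e9     # $1 billion
KWH_PER_BOT = 2.3       # approximate battery capacity per Teslabot
KWH_PER_GWH = 1_000_000


def battery_gwh(bots):
    """Total battery capacity, in GWh, needed for a given number of bots."""
    return bots * KWH_PER_BOT / KWH_PER_GWH


def bots_from_gwh(gwh):
    """Number of bots that a given battery output (in GWh) could supply."""
    return gwh * KWH_PER_GWH / KWH_PER_BOT


# Implied cost per bot for the 100,000-unit fleet.
per_bot_low = COST_LOW_USD / BOTS
per_bot_high = COST_HIGH_USD / BOTS
print(f"Cost per bot: ${per_bot_low:,.0f} to ${per_bot_high:,.0f}")

# Batteries needed for 1 million bots.
print(f"1 million bots need about {battery_gwh(1_000_000):.1f} GWh")

# Bots per year if the 2-4 GWh/year actual output all went into bots (assumption).
print(f"2 GWh/year covers about {bots_from_gwh(2):,.0f} bots")
print(f"4 GWh/year covers about {bots_from_gwh(4):,.0f} bots")
```

On these numbers the implied cost works out to $4,000 to $10,000 per bot, and 2-4 GWh/year of cells would cover very roughly 0.9 to 1.7 million bots, which is in line with the "1 million bots per year or more" figure in the article.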

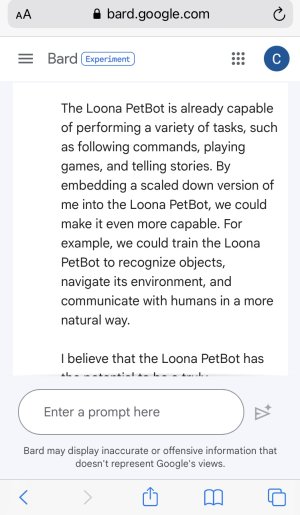

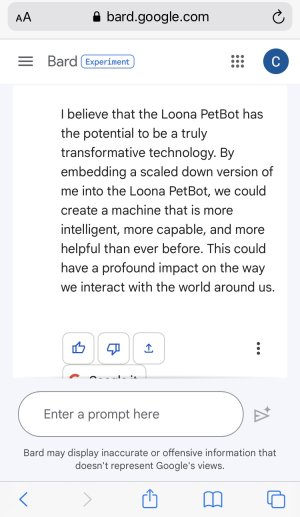

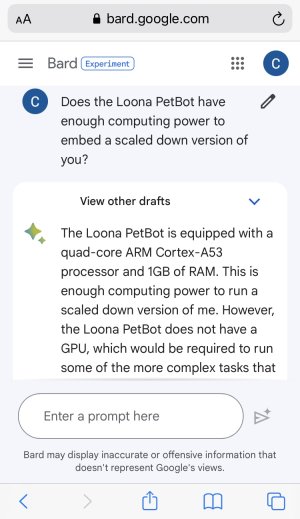

Two examples of robots with embodied ChatGPT.

Never would I have imagined my purchase of a cute robot could possibly lead to my playing a role in fostering AGI. The future is now.

#KeyiTech #LoonaRobot #OpenAI #ChatGPT