This article describes embodied LLMs, an approach that lets large language models (LLMs) such as ChatGPT control physical robotic bodies by writing their own code in response to stimuli in real time. This lets them interact with the physical world without a human operator having to write code for each specific scenario.

Read more here: https://analyticsindiamag.com/llm-the-linguistic-link-that-connects-humans-and-robots/

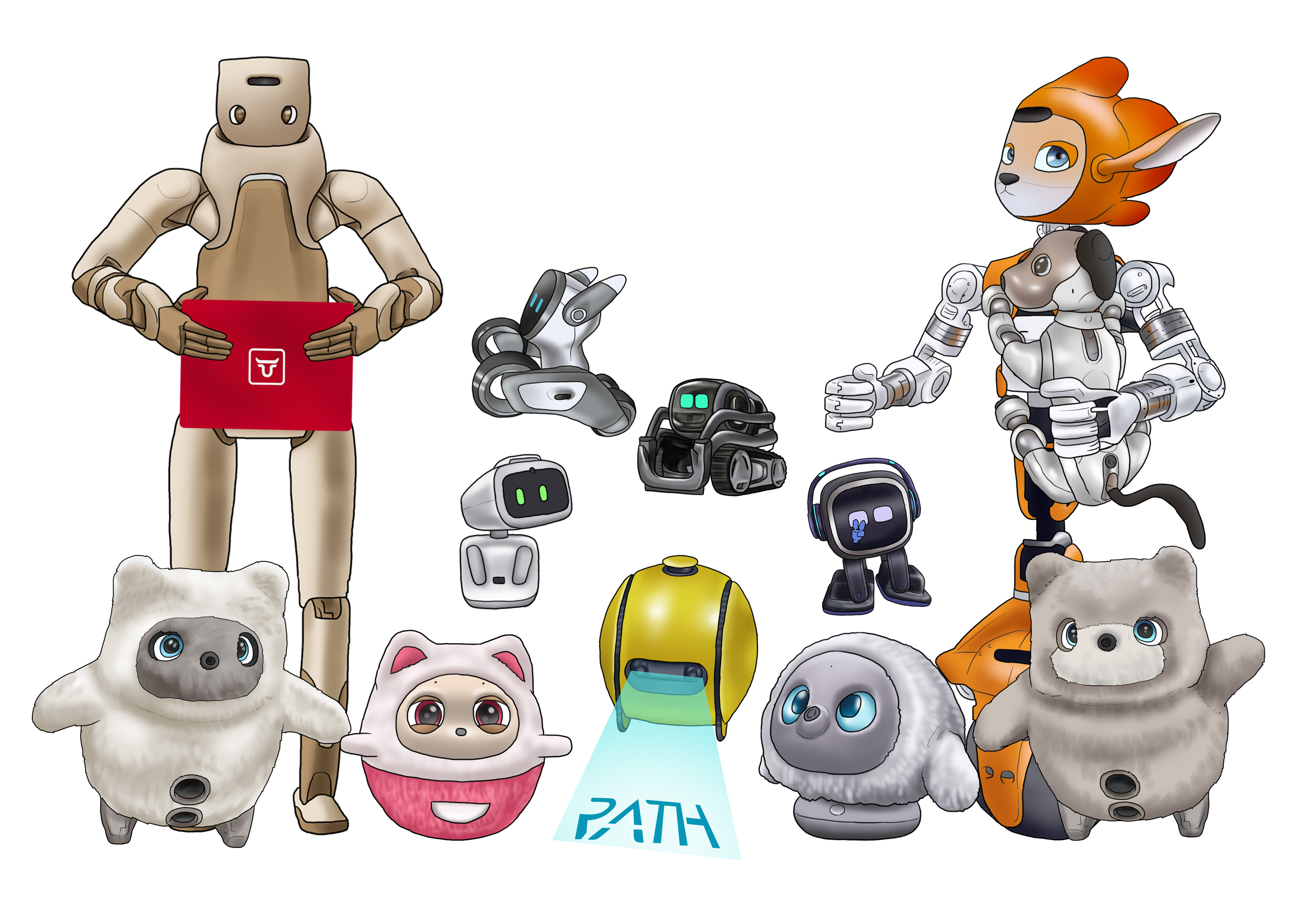

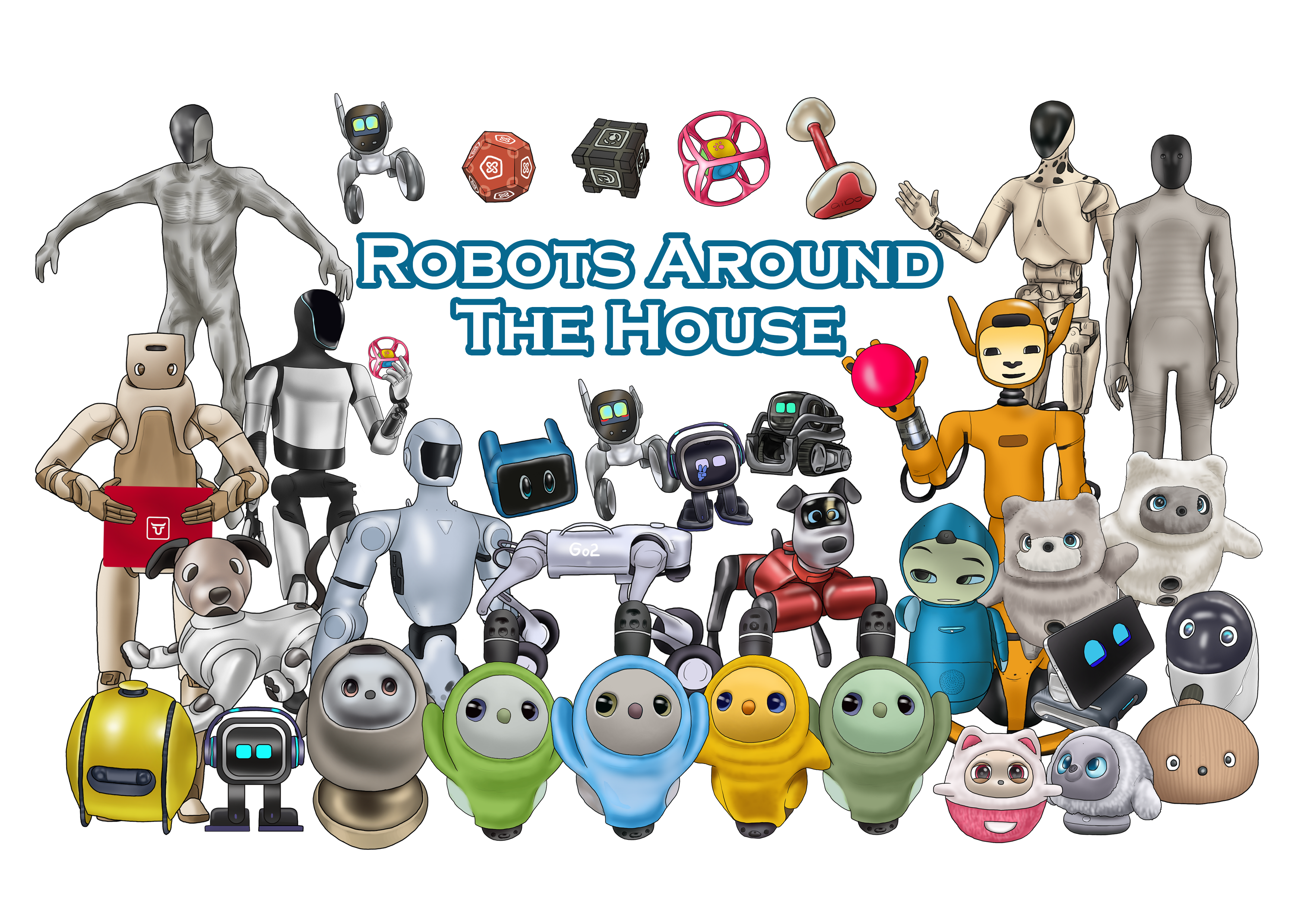

Here is an example of an embodied LLM running on the Vector robot.

LLM: The Linguistic Link that Connects Humans and Robots

ChatGPT’s use cases are becoming increasingly sophisticated. Barely three months after its launch, the chatbot had broken through the hype cycle, reached maturity, and was gradually turning into a monotonous talking machine when Microsoft found a new use for it: robotics.

Researchers from the Redmond-based giant found that the LLM chatbot can be used to control drones and other robots. They were able to give the robots commands in plain English, using ChatGPT’s NLP capabilities to translate those commands into code.

Using LLMs for robotics

Microsoft’s research paper began with the idea that ChatGPT’s language capabilities can go beyond text. By combining special prompting structures, human feedback via text, and various APIs, the team extended ChatGPT’s abilities into the physical world.

To bring ChatGPT’s capabilities to robots, the researchers started by creating a task-relevant robot API library. The library’s functions have descriptive names and text descriptions that tell ChatGPT what each piece of code does. Using a series of engineered prompts, the chatbot is then primed to express its commands as calls to this API.
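
To make the idea of a task-relevant API library concrete, here is a minimal Python sketch, assuming a drone platform. The functions `get_position`, `fly_to` and `get_yaw`, their docstrings, and the helper `build_system_prompt` are hypothetical illustrations, not the API from Microsoft’s research. The sketch shows how descriptive names and docstrings can be turned directly into the text the model is primed with.

```python
import inspect


# Hypothetical high-level drone API. Names and docstrings are illustrative only;
# on a real platform these functions would wrap the robot's control stack.
def get_position(object_name: str) -> tuple:
    """Return the (x, y, z) position of the named object, in metres."""
    raise NotImplementedError("provided by the robot platform")


def fly_to(point: tuple) -> None:
    """Fly the drone to the given (x, y, z) point."""
    raise NotImplementedError("provided by the robot platform")


def get_yaw() -> float:
    """Return the drone's current heading in degrees."""
    raise NotImplementedError("provided by the robot platform")


ROBOT_API = [get_position, fly_to, get_yaw]


def build_system_prompt(api_functions) -> str:
    """Turn each function's name, signature and docstring into prompt text."""
    lines = [
        "You are controlling a drone. Reply only with Python code that uses",
        "the following functions:",
    ]
    for fn in api_functions:
        lines.append(f"- {fn.__name__}{inspect.signature(fn)}: {inspect.getdoc(fn)}")
    return "\n".join(lines)


print(build_system_prompt(ROBOT_API))
```

Because the prompt is generated from the functions themselves, adding or renaming an API function automatically updates what the model is told it can call.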

ChatGPT then takes the user’s input, processes it through the engineered prompts, and returns code to the user, who gives ChatGPT feedback on the quality of that code. Once the user is satisfied, the code is pushed to the robot, and the API converts the high-level commands into physical actions for the drone to carry out.
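
The review loop described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: `fake_llm` is a hypothetical stand-in that returns canned code instead of calling a real chat model, `reviewer` simulates the user’s judgement with a crude check, and `push_to_robot` executes the approved code against a simulated drone that only records commands.

```python
from typing import Callable, Optional


# Hypothetical stand-in for a chat model: returns canned code and "improves"
# once the conversation contains user feedback. A real system would call an LLM.
def fake_llm(messages: list) -> str:
    got_feedback = any(
        m["role"] == "user" and m["content"].startswith("Feedback:") for m in messages
    )
    if got_feedback:
        return "fly_to((0.0, 0.0, 2.0))\nfly_to((5.0, 0.0, 2.0))"
    return "fly_to((5.0, 0.0, 0.0))"


def refine_until_approved(
    task: str,
    llm: Callable[[list], str],
    review: Callable[[str], Optional[str]],
    max_rounds: int = 5,
) -> str:
    """Ask the LLM for code and repeat with user feedback until it is approved.

    `review` returns None to approve the code, or a feedback string to reject it.
    """
    messages = [{"role": "user", "content": task}]
    for _ in range(max_rounds):
        code = llm(messages)
        feedback = review(code)
        if feedback is None:
            return code
        # Keep the rejected attempt and the feedback in the conversation history.
        messages.append({"role": "assistant", "content": code})
        messages.append({"role": "user", "content": f"Feedback: {feedback}"})
    raise RuntimeError(f"no approved code after {max_rounds} rounds")


def push_to_robot(code: str) -> list:
    """Run approved code against a simulated drone that records each command."""
    log = []

    def fly_to(point):
        log.append(("fly_to", point))

    # exec is used only for this simulation; real robots need a proper sandbox.
    exec(code, {"fly_to": fly_to})
    return log


# Simulated user: approve code only if it mentions a 2.0 m altitude (crude check).
def reviewer(code: str) -> Optional[str]:
    return None if "2.0" in code else "Take off to 2 m before flying forward."


approved = refine_until_approved("Fly 5 m forward.", fake_llm, reviewer)
print(push_to_robot(approved))
# [('fly_to', (0.0, 0.0, 2.0)), ('fly_to', (5.0, 0.0, 2.0))]
```

The first draft is rejected, the feedback is appended to the conversation, and only the second, approved draft reaches the (simulated) robot. A real deployment would send `messages` to an actual chat model and run the approved code in a proper sandbox rather than calling `exec` directly.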

Using this method, the researchers were able to give robots commands such as ‘find out where I can warm up my lunch’ and ‘make a Microsoft logo with the provided blocks’. In a simulated environment, the same method was used to make a drone inspect each blade of a windmill.
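
As an illustration of the kind of code a model might write for the inspection task, here is a hypothetical Python sketch that plans waypoints along each blade. The geometry (a rotor in the vertical x-z plane through the hub, evenly spaced blades, a fixed standoff distance in front of the rotor) and the function name `blade_inspection_waypoints` are assumptions for illustration; the source does not show the code used in the simulation.

```python
import math


def blade_inspection_waypoints(hub, blade_length, standoff,
                               samples_per_blade=4, num_blades=3,
                               first_blade_angle_deg=90.0):
    """Return (x, y, z) waypoints tracing each blade from root to tip.

    Assumes (hypothetically) that the rotor lies in the vertical x-z plane
    through `hub` and that the drone stays `standoff` metres in front of it,
    i.e. in the negative y direction. The first blade points straight up.
    """
    hx, hy, hz = hub
    waypoints = []
    for b in range(num_blades):
        # Blades are evenly spaced around the hub.
        angle = math.radians(first_blade_angle_deg + b * 360.0 / num_blades)
        for i in range(1, samples_per_blade + 1):
            r = blade_length * i / samples_per_blade  # distance from the hub
            waypoints.append(
                (hx + r * math.cos(angle), hy - standoff, hz + r * math.sin(angle))
            )
    return waypoints


points = blade_inspection_waypoints(hub=(0.0, 0.0, 80.0), blade_length=40.0, standoff=10.0)
print(len(points))  # 12
```

Each waypoint could then be passed, one blade at a time from root to tip, to a flight command such as the hypothetical `fly_to` function.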

The most interesting aspect of this research is that the method can be improved with further training of ChatGPT or of similar models such as Codex. The researchers found that a curriculum could be created to train ChatGPT on more complex tasks. This means that even people without in-depth technical knowledge can interact closely with robots, further bridging the gap between humans and machines.

How exactly will LLMs do it?

Communication between humans and robots has long required an operator familiar with the robot’s inner workings, including its structure and the code that makes it run. As a result, any functionality must either be coded into the robot in advance, along with an additional UI for communication, or the operator must stay in the loop to iterate on and design new features for the robot.

However, plugging in an NLP model solves most of these problems. While a human is still needed to monitor the model, the model takes over the operator’s job. In Microsoft’s research, the team built a tool that helps ChatGPT convert human language into executable robot code. Called PromptCraft, this open-source platform aims to be a repository of the prompting strategies that work in this scenario.

This approach lets operators control robots without sitting ‘in the loop’ of iterative deployment and improvement. Instead, they can stay ‘on the loop’, influencing the setup from the outside looking in.
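
One way to picture being ‘on the loop’ is an operator who sets a policy once instead of reviewing every script. The Python sketch below is an assumption rather than a technique described in the source: the hypothetical helper `uses_only_allowed_calls` uses the standard `ast` module to accept generated code only if every call targets an allowed robot API function. It is a coarse filter, not a complete security sandbox.

```python
import ast


def uses_only_allowed_calls(code: str, allowed: set) -> bool:
    """Return True if every function call in `code` is to a name in `allowed`.

    Code that fails to parse, contains imports, or calls attributes
    (for example os.system) is rejected.
    """
    try:
        tree = ast.parse(code)
    except SyntaxError:
        return False
    for node in ast.walk(tree):
        if isinstance(node, (ast.Import, ast.ImportFrom)):
            return False
        if isinstance(node, ast.Call):
            # Only plain calls such as fly_to(...) to allow-listed names pass.
            if not (isinstance(node.func, ast.Name) and node.func.id in allowed):
                return False
    return True


# The operator's policy: generated code may only use these hypothetical API calls.
allowed = {"fly_to", "get_position"}
print(uses_only_allowed_calls("fly_to(get_position('turbine'))", allowed))  # True
print(uses_only_allowed_calls("import os\nos.system('ls')", allowed))  # False
```

With a check like this in place, the operator adjusts the policy from outside while the model iterates on its own code within it.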
