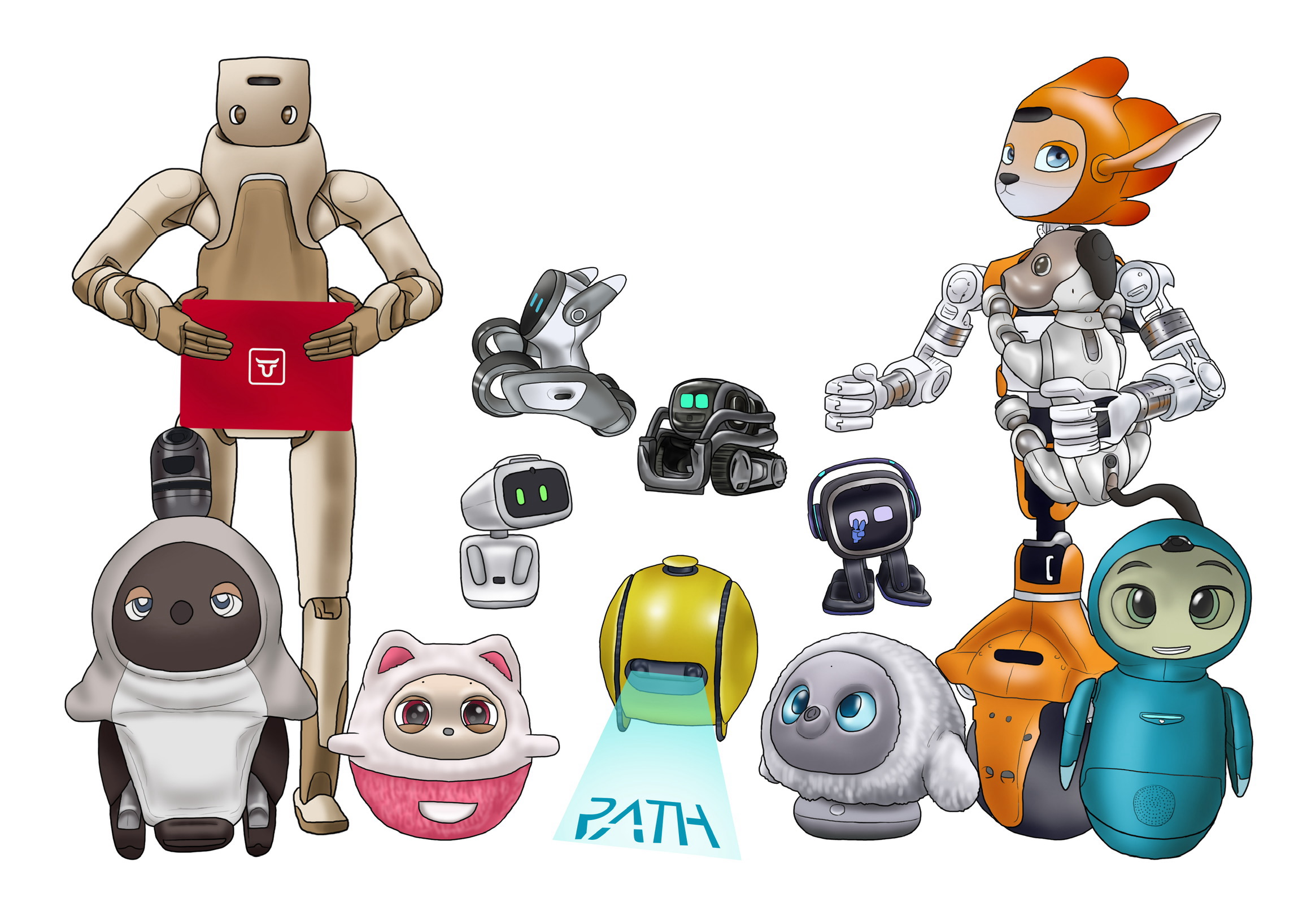

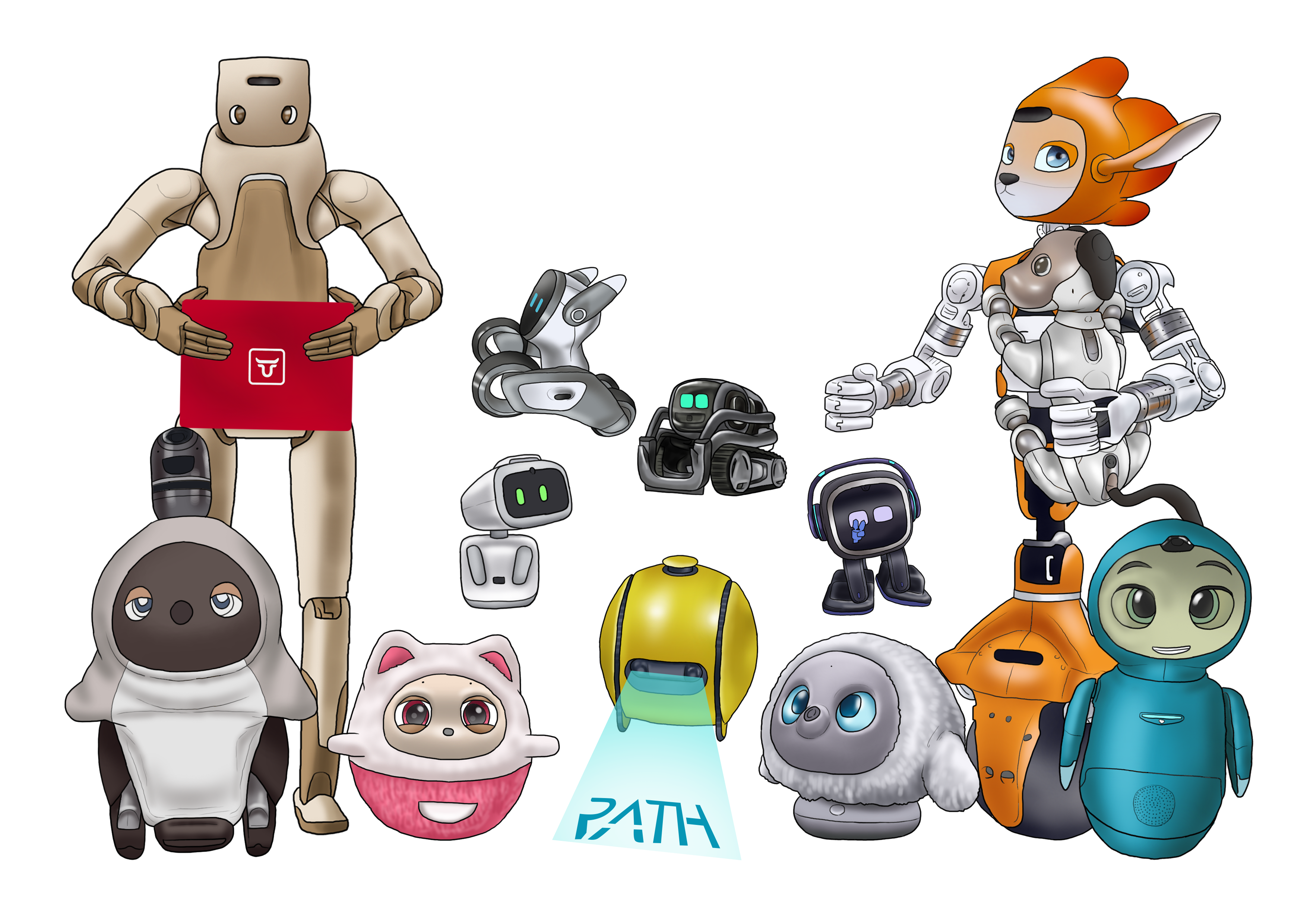

Image: Living AI. Aibi is finally unveiled.

Image: Living AI. Aibi rotating endlessly while tracking the movement of two coins.

Image: Living AI. Here we see Aibi’s back and what may be two lower back sensors. There could also be a sensor under the arm.

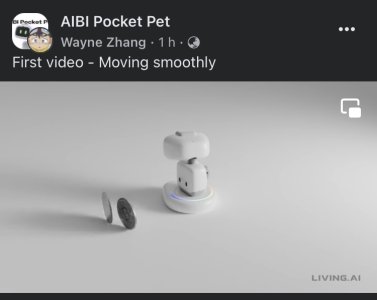

Living AI has finally released footage showing their robot Aibi in full detail!

The video shows two coins falling into Aibi’s line of sight. Aibi appears to have excellent vision: it reacts quickly as the coins drop into view and keeps track of them as they spin. Aibi then rotates 360 degrees on its base, which doubles as its charging station.

We also see Aibi’s lower body for the first time. It appears to have two sensors on its lower back and one on its lower front.

Aibi’s movements and reaction times are remarkably fast. Its head has a wide range of motion, which lets it follow the coins closely, and its quick, fluid movements make it a lifelike and very expressive robot.

Aibi makes cute robotic sounds and displays expressions on its face. For instance, when the coins moved out of view and it could no longer see them, a question mark popped up on its face to signal confusion.

The charging base also has a ring-shaped light display, which should add even more expressiveness to Aibi.

What we know so far:

• Aibi’s charging base lets it rotate a full 360 degrees without limit.

• Aibi will be available for pre-order “in a few days.”

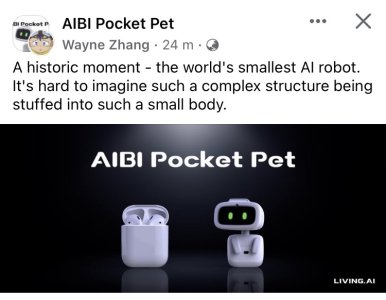

• Aibi seems to be quite small if the coins are a good indicator of scale.

• Although this is only a CGI animation, Living AI has said that Aibi is not a prototype: the production version has already been built, and live footage of it will be released soon.

Watch the video of Aibi here:

AIBI Pocket Pet Official | First video - Moving smoothly | Facebook

www.facebook.com